Design Fiction Projects

Grounded as much in imagination as reality, design fiction is about bending the rules. It’s about asking “What if?”, and using the remains to probe the edges of our changing world.

The results may only be props or prototypes, but the best ones end up helping us navigate our near futures and the stories they share.

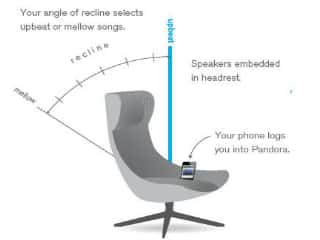

AN IKEA CATALOG FROM THE NEAR FUTURE

Ask yourself — in the IoT future, what role might Ikea play? They make 'things', don't they? And not just hokey, silly, confusing things, things...

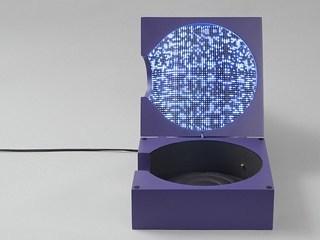

CONNECTED LAMP

A lamp capable of conveying an intangible emotional connection between patients at the hospital and their family and close friends. I also led...

HERTZIAN ARMOR

Design academics Anthony Dunne and Fiona Raby coined the term “Hertzian Space” to describe the invisible and energetic — but very real — world of...

MECHANOMICS

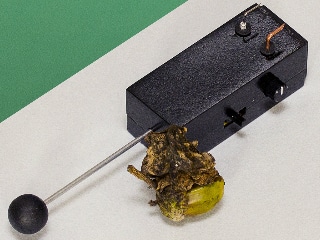

In the late 1940s and early 1950s, economics professor Bill Phillips created a hydro-mechanical analogue computer, which used the movement of water...

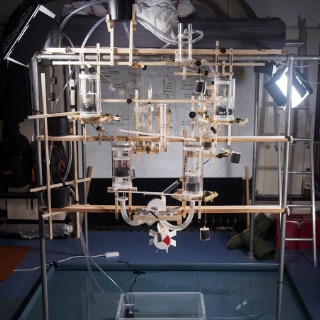

SPACEJUNK

The five twigs in this installation point in unison in the direction of the oldest piece of human-made space debris currently above the horizon. The...

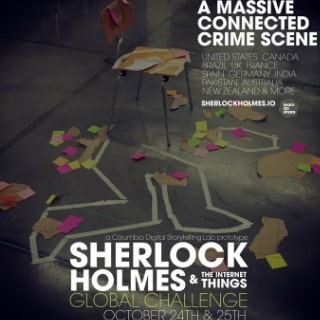

SHERLOCK HOLMES & THE INTERNET OF THINGS

Sherlock Holmes & the Internet of Things is an ongoing prototype developed and run by the Columbia University Digital Storytelling Lab that...

TEACHER OF ALGORITHMS

Teacher of Algorithms looks at another aspect of automated behavior: What do you do when your "smart" gadget's machine learning algorithm doesn't...

THE COW OF TOMORROW

“The Cow of Tomorrow” describes an extreme future use of animals taking notions of utility and domestication to a logical end.

A tiny...

THE RITUAL MACHINES

The project, a collaboration between the Royal College of Art’s Helen Hamlyn Centre for Design and the Digital Interaction Group at Newcastle...

THE SELFIE PLANT

In recent times, the selfie culture has risen in popularity, but it has also raised a few questions. Whether the Selfie culture helps to build...

UNINVITED GUESTS

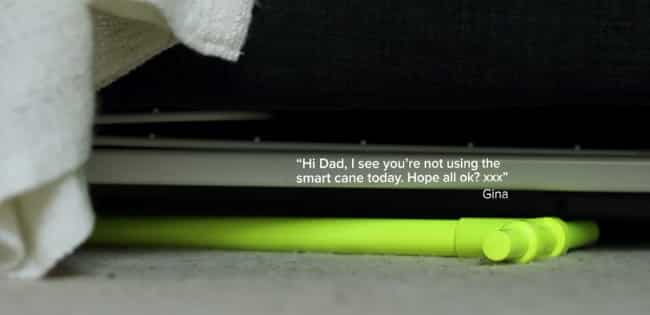

Thomas, aged 70, lives on his own after his wife died last year. His children send him smart devices to track and monitor his diet, health and...

WING

This robotised interactive object / installation looks like a wing with a span of 2.5 meters, hung at a height of 3 to 4 meters. A thin cable is hanging...

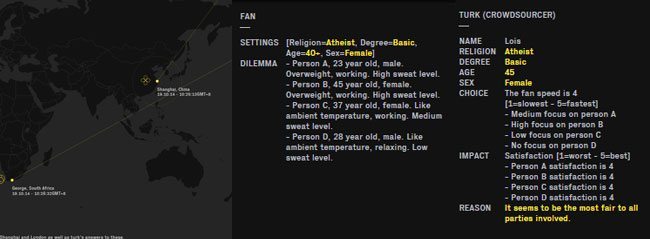

ETHICAL THINGS

The "Ethical Things" project looks at how an object, facing everyday ethical dilemmas, can keep a dose of humanity in its final decision while...

OBJECTS OF RESEARCH

Around the question: Who is the object in the Internet of Things?, the project explores the scenario in which artefacts in the house could not...

BLACK MIRROR

Black Mirror is a British television anthology series created by Charlie Brooker that features speculative fiction with dark and sometimes...

PROVERBIAL WALLETS

These wallets sync to your smartphone and bank accounts, and haptic actuators installed in the wallet itself give physical feedback on your transaction activity and bank account balance.

One of the thought leaders in this category, Julian Bleecker, sums the topic up well in his essay "Design Fiction: A Short Essay on Design, Science, Fact and Fiction" with these words:

"I proposed “design fiction” in order to think about how design can tell thoughtful, speculative stories through objects... I decided on fiction not so much to create objects that are for storytelling, but to create objects that help think through matters-of-concern. I am interested in working through materialized thought experiments. Design fictions are propositions for new, future things done as physical instantiations rather than future project plans done through PowerPoint."

"Smart, creative, imaginative ways of linking ideas to their materialization really do matter, because the future matters, and we will use whatever means possible to do create these better worlds, including the simultaneous deployment of science, fact, fiction and design."

Spotlights

Take a deeper look at the ideas behind these design fiction projects.

We tend to think of technological devices as neutral tools, available for humans to use in support of whatever value systems we subscribe to. But, as demonstrated by the recent debate over Apple’s refusal to help the FBI gain a back-door into encrypted iPhones, the design of our technology actually does embody ideology, supporting or resisting specific human infrastructures of ethics and power.

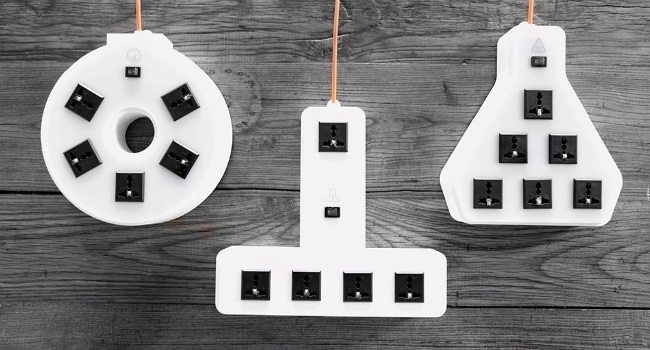

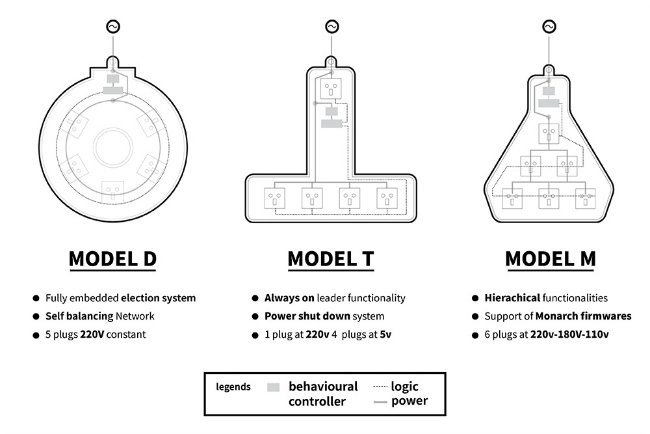

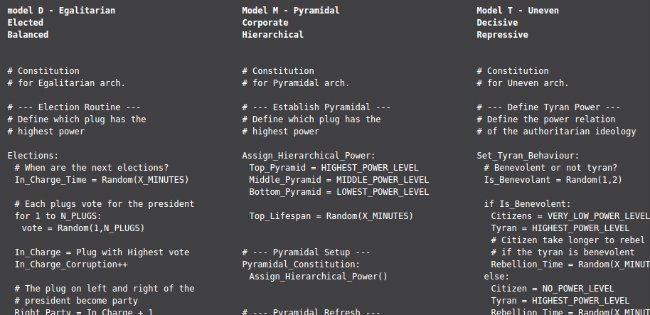

A new design fiction from Shanghai-based consultancy Automato applies this idea by playing on the dual meaning of “power”. Politics of Power is a set of three multi-plug outlets, each of which is designed to share electricity according to different hierarchies of control.

In the Model D, the outlets are arranged in a circle. When multiple devices are plugged in, the outlets periodically vote to delegate a leader, who gains a greater share of the electricity until the next election. If one outlet stays in charge too long it can become corrupt, but the system is essentially egalitarian because every outlet has the chance to be in charge from time to time.

The Model M is pyramidal, like a corporate flowchart. Electricity is distributed in a fixed hierarchy with the greatest share going to the “monarch” outlet at the top, middling shares going to the “support” tier, and the lowest share going to the “plebeian” outlets on the bottom row. This structure also maintains order: the distribution of electricity remains stable without a monarch, but if all of the middle managers are removed then the current starts to fluctuate wildly.

Things are even less stable in the Model T, which is patterned after despotic or authoritarian governments. The one plug at the top always gets the lion’s share of electricity, and chooses at random between sharing a trickle of current down to its oppressed subjects, or keeping every last electron for itself. The citizen outlets periodically rebel, throwing the distribution into chaos until the tyrant can reestablish order. But if the top spot is left vacant for a while, the citizens will settle into an egalitarian mode like the Model D…until the head honcho is plugged in again.
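The sharing rules described for the Model M and Model D can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Automato's firmware: the tier weights, the leader bonus, the random voting rule and all function names are hypothetical, chosen to show how a political hierarchy turns into a fraction of the available current.

```python
import random


def model_m_shares(tier_sizes, tier_weights=(4.0, 2.0, 1.0)):
    """Fixed hierarchy (Model M): every outlet in a tier gets that tier's weight.

    tier_sizes lists the number of outlets per tier, top tier first.
    The weights are assumed values, not measurements from the real object.
    """
    raw = []
    for size, weight in zip(tier_sizes, tier_weights):
        raw.extend([weight] * size)
    total = sum(raw)
    return [w / total for w in raw]


def elect_leader(n_outlets, rng):
    """Rotating leadership (Model D): each outlet votes for a random outlet.

    The outlet with the most votes wins; ties go to the lowest index.
    """
    votes = [0] * n_outlets
    for _ in range(n_outlets):
        votes[rng.randrange(n_outlets)] += 1
    return votes.index(max(votes))


def model_d_shares(n_outlets, leader, leader_bonus=2.0):
    """The elected leader gets a larger share until the next election."""
    raw = [leader_bonus if i == leader else 1.0 for i in range(n_outlets)]
    total = sum(raw)
    return [w / total for w in raw]


if __name__ == "__main__":
    rng = random.Random(0)  # seeded so repeated runs hold the same elections
    print("Model M:", model_m_shares([1, 2, 3]))
    for term in range(3):
        leader = elect_leader(5, rng)
        print("Model D term", term, "leader", leader, model_d_shares(5, leader))
```

Calling `elect_leader` again for each term is what keeps the Model D sketch roughly egalitarian over time, while `model_m_shares` never changes unless outlets are added or removed.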

Of course, these examples would be impractical for distributing electricity between appliances in a home or other real-world setting. But they demonstrate the power of technological design to enforce political realities.

Politics of Power offers a simplified way of understanding what’s at stake in debates over Net Neutrality, peer-to-peer networks, encryption back-doors, and other tech controversies. And as the world continues to fill homes and businesses with networked, power-hungry, “smart” devices, it forces us to wonder: Are the decision-making processes designed into the hardware, the software and the network itself supporting the ideologies and value systems that we want to trickle up to the human layer?

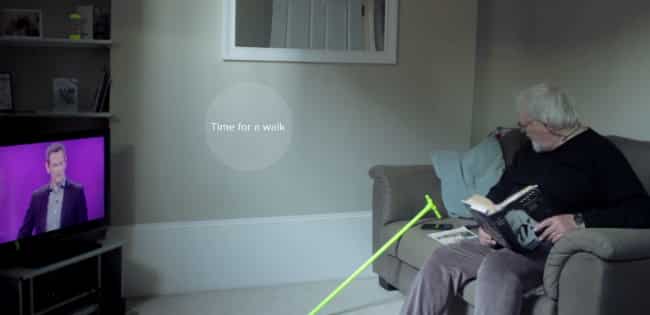

Uninvited Guests is the latest design fiction project commissioned by the interdisciplinary research group ThingTank. Created by design firm Superflux, it explores the way our relationship with technology is changing as our gadgets increasingly become nags and minders meant to improve our daily habits.

Specifically, Superflux focused in on home healthcare devices: smart objects and sensors that track everything from nutrition to exercise to sleep patterns. Many consumers are adopting these devices willingly in an attempt to improve their quality of life, but they are also being promoted as a way to remotely keep tabs on relatives who are elderly or in poor health.

The question is whether the person being monitored perceives each device as a benefit, or an electronic leash that takes away their control over their own life—and how that attitude changes their interaction with the technology.

In the short film accompanying Uninvited Guests, an elderly man has been saddled with a number of smart gadgets by his loving (but perhaps, in his eyes, overbearing) adult children. Over the course of three days, we see how his relationship with these devices evolves.

At first he uses them begrudgingly, disrupting his daily patterns every time a digital reminder prompts him to eat more vegetables or get up off the couch. Then he rejects the devices altogether, despite frequent messages from his concerned children. Finally, he invents ways to trick the devices’ sensors, and his family, so that he can go back to the routine he prefers—never mind the suboptimal health effects.

The film is a humorous but poignant reminder that technology doesn’t automatically create change or solve problems; we humans have to play an active and participatory role. The Superflux design team writes:

Whilst there are undeniable benefits to monitoring and tracking the elderly in their homes, we wanted to pause and reflect on some of the more complex human behaviours we are likely to encounter along the way. What are the messy, whimsical, unintended human behaviours that might collide with the one-size-fits-all ‘care’ that many smart devices are designed to deliver?

Saying Things That Can’t Be Said

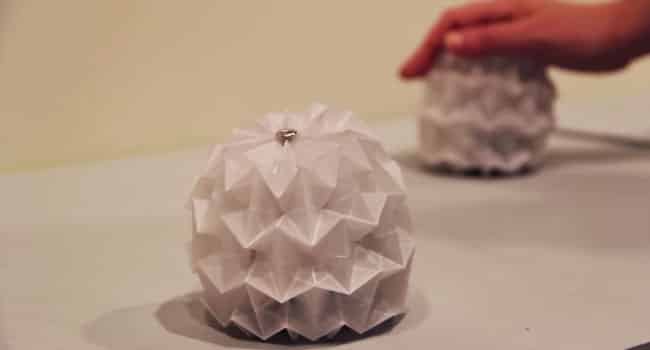

Technologies that connect computers often also connect people: from smileys to Snapchat, we have plenty of ways to communicate online, even nonverbally. But no digital technology can ever replace the subtle, unspoken signals we use to communicate in person, and that’s especially true for displays of affection. How can a tender touch, a warm hug, or a kiss be reduced to ones and zeros?

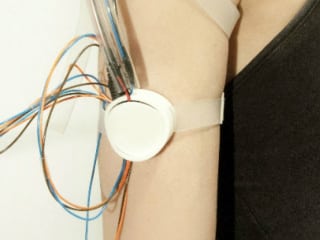

That’s the challenge Israeli design students Daniel Sher and Ben Hagin took on with their final project at Holon Institute of Technology. Saying Things That Can’t Be Said is a collection of networked objects that don’t recreate physical sensations — “Trying to imitate that will always feel fake,” Sher says — but instead capture the emotional content of human gestures in a playful, interactive way.

Among the objects are a pair of origami globes that, when held, pulsate in time with the heartbeat of the user on the other end; a paper butterfly that flutters when someone blows on a sensor-equipped flower; and a pinwheel that can be spun to make a paired device release a spray of soap bubbles.

Other devices for connecting long-distance loved ones take a rather straightforward approach to telepresence, attempting to make it feel like someone far away is really there with you. The list includes tame concepts like vibrating bracelets that transmit touch and pillows that glow when a partner goes to bed, as well as risqué options like remote-controlled sex toys (possibly NSFW). Saying Things That Can’t Be Said, while unlikely to be mass-produced, demonstrates the possibilities for relationships to be maintained through more subtle interactions.

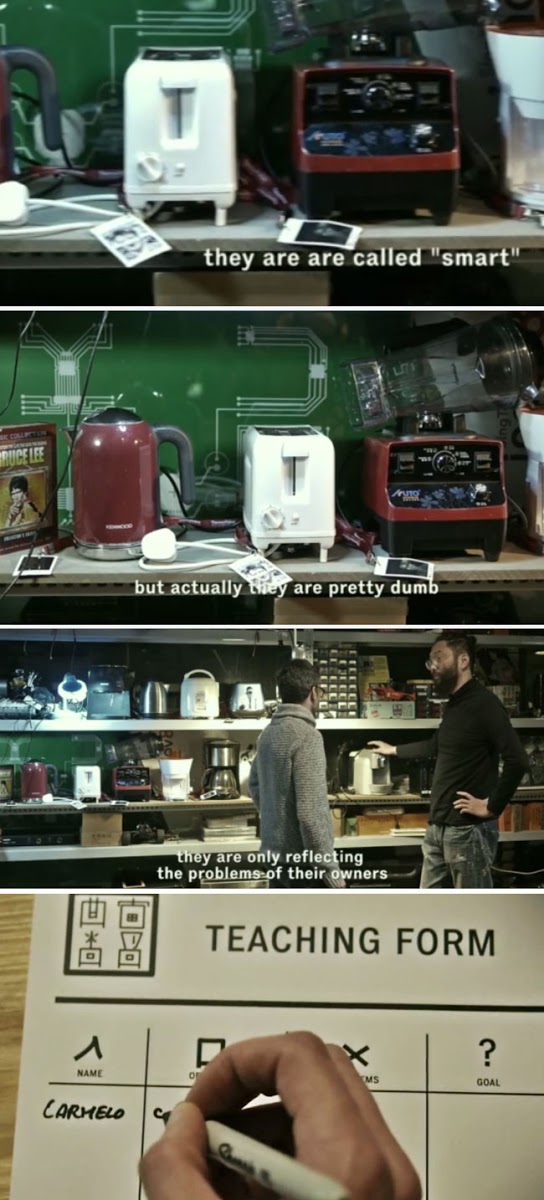

Machine Learning Design Fiction

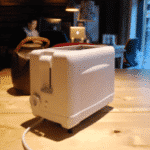

Teacher of Algorithms is the latest design fiction project from Simone Rebaudengo, who is quickly becoming the unofficial Philosopher Laureate of the Internet of Things. Rebaudengo’s previous work has focused on IoT devices that are addicted to being used and objects that can make ethical decisions by crowdsourcing input from humans around the world.

Teacher of Algorithms looks at another aspect of automated behavior: What do you do when your “smart” gadget’s machine learning algorithm doesn’t actually learn what you want it to?

In the video, the titular teacher runs what is essentially a kennel for connected devices. Like taking a dog to an animal trainer, people leave their misbehaving devices in his care. Then he uses his expert understanding of algorithmic “psychology” to design training exercises that teach each object to act in a way optimized for the owner’s habits and preferences—from operant conditioning with digital punishments and rewards, to simulation rooms that mimic the object’s home environment.

While the business model is still a little ways off in the real world, the concept isn’t that different from the old cliché about paying the neighbor’s kid to program your VCR. And it highlights one of the challenges for designers of “smart” products: A device that is capable of learning is great, but the burden is still on users to spend the time and effort to tailor its behavior to their own liking.

And with IoT devices becoming integrated ever more deeply into our daily routines and private lives, the stakes are accordingly higher. A device that latches onto the wrong pattern could cause all kinds of havoc or even create dangerous situations. As Rebaudengo writes, why should users have to “deal with the initial problems and risk that it might even learn wrongly?”

Teacher of Algorithms was commissioned by ThingTank, a collaboration between design, anthropology, and computer science researchers at several universities in Europe, Japan and the U.S.

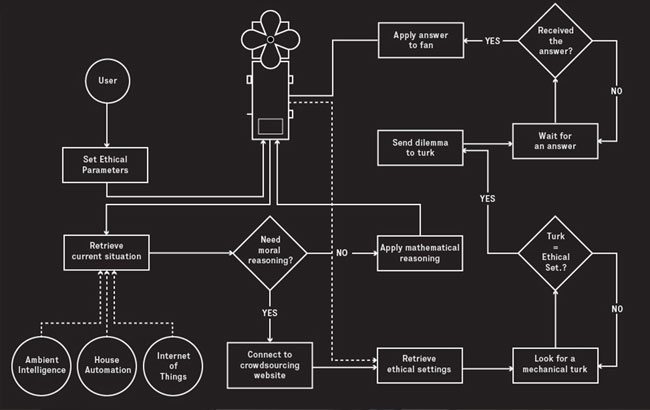

Crowdsourced “Moral” Machines

Whenever we talk about the Internet of Things in terms of “smart” devices, we’re talking about a certain level of artificial intelligence: The ability of objects to increasingly make independent decisions about their own behavior.

So far our devices mostly make choices based on logical or mathematical reasoning, and the decisions are deemed “intelligent” if they meet our needs as human users. Lights come on when we enter the room, dim when we start watching a movie, and go off when we climb into bed; thermostats factor humidity, weather patterns and sunlight levels into their algorithms and switch the heat on when we head home from work. The decisions may be complex, but the reasoning is straightforward: Do what the user wants.

But as smart devices become more pervasive and the systems they interact with become more intertwined throughout our lives, automated decisions start to have moral implications. Healthcare devices, self-driving cars and battlefield robots are just a few examples of objects whose choices can have life-or-death consequences. How should devices make decisions then?

Ethical Things is one attempt at an answer, from designers Matthieu Cherubini and Simone Rebaudengo (creator of the Addicted Toaster that won Postscapes’ 2014 Editors’ Choice IoT Award for Design Fiction). Their solution: Ask a human being what the object should do.

Playing on Amazon’s crowdsourced tasking platform Mechanical Turk, the practice of “Ethical Turking” involves programming objects to refer their moral quandaries to flesh-and-blood people, who are—at least in the eyes of some philosophers—hardwired for ethical reasoning.

The test case is a rotating fan that knows there are several people in the room, each of whom may be working hard or kicking back, thin or overweight, healthy or ill—and each has their own baseline temperature preference. How should the fan decide which person to focus on, and for how long? In exchange for a small online payment, a human Ethical Turk will analyze the situation and send back a decision, complete with a written explanation of their reasoning.

Of course, different people often draw on wildly different moral perspectives. The fan itself includes a number of toggles to set the age, sex, education level and religion of the Ethical Turks it should seek out for guidance; but that’s no guarantee that the feedback it receives will be based in sound moral reasoning. In practice, many of the answers generated by the project reveal a bias against overweight people, while others spread the fan’s attention equally regardless of the preferences of those on whom it’s blowing.
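To make the toggle-and-ask loop concrete, here is a minimal Python sketch. It is not the project's code: the `Turk` record, the two toggles shown (minimum age and religion, out of the four the fan offers) and the majority-vote rule for combining several answers are all assumptions made for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Turk:
    """A hypothetical human respondent and the answer they sent back."""
    age: int
    religion: str
    chosen_person: str  # who the fan should face
    reasoning: str      # the written explanation


def matches(turk, min_age=None, religion=None):
    """Apply the fan's toggles; None means the toggle is left open."""
    if min_age is not None and turk.age < min_age:
        return False
    if religion is not None and turk.religion != religion:
        return False
    return True


def decide(turks, **toggles):
    """Return the person chosen most often by the eligible respondents.

    Ties are broken alphabetically so the result is deterministic.
    """
    eligible = [t for t in turks if matches(t, **toggles)]
    if not eligible:
        raise ValueError("no respondent matches the current toggles")
    counts = Counter(t.chosen_person for t in eligible)
    best = max(counts.values())
    return min(person for person, n in counts.items() if n == best)
```

Even in this toy version, changing a toggle changes who is eligible to answer, and that alone can flip the fan's decision.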

The difficulty of finding a consistent—much less a “correct”—way of making the decision is exactly the point. By reminding us of how challenging moral reasoning is for human beings, Ethical Things demonstrates how hard it will be to create objects that can act morally on their own. And perhaps an even greater challenge will be to decide what counts as “moral” behavior for objects in the first place.

Object Agency

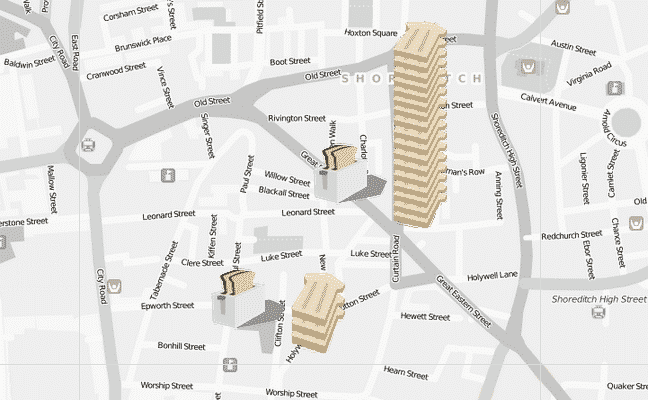

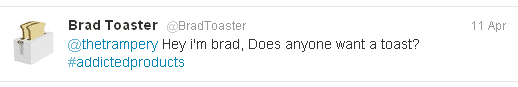

I would like to introduce you to Brad. He is a toaster and a member of a new breed of networked “addicted products” created by Simone Rebaudengo and Haque design+research that explore some new ground rules for their use:

- You don’t buy one, you ask for one, and try to convince it that you are a good candidate.

- You don’t own them, they test you, and decide if you are a good host.

- You don’t share them, they share themselves if they are not happy with you.

Today Brad is feeling “pretty useless compared to the other toasters” at the Pachube office, where he currently sits. If his hosts don’t start giving him a fix, they could end up on the network’s “black toast list” and lose him to someone better suited to his needs.

Follow along with the project developments at: http://designedaddictions.tumblr.com/ or see if you can convince Brad (@BradToaster) or one of his brothers and sisters to come over to your place at: http://www.addictedproducts.com/

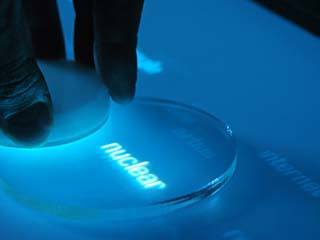

As part of her final thesis project in Art Center’s Media Design Practices/Lab program, Jessica Lee asks us: “What do stocks, fortune cookies, Twitter, and uranium have in common?”

Going by the title “Objective Devices” the project looks at the “paradigm shifts that are occurring in modern physics and proposes a design methodology that collapses the space between science, technology, design, and culture.”

The first device, “ignorant decay”, is a Geiger counter that detects radioactive signals, produces an algorithm and trades on the open stock market based on the stochastic behavior of radioactive decay.

Created using a SparkFun Geiger Counter, a Raspberry Pi and the TradeKing API, the design fiction looks at how a “physical, random phenomena can effect stock decisions based on its stochastic behavior. The results are tracked and measured by visually representing the behavior of both the Uranium and current health of the stock market.”
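One way to picture how Geiger counter clicks could drive trades is sketched below in Python. This is not Lee's algorithm, which the project description does not spell out; it assumes a common trick for harvesting randomness from decay, comparing the lengths of two successive gaps between clicks. The function names and the buy/sell mapping are hypothetical, and no brokerage API is called.

```python
def decay_bits(click_times):
    """Turn Geiger click timestamps (in seconds) into random bits.

    Assumed method: take the gaps between clicks in non-overlapping pairs
    and emit 1 if the first gap is shorter than the second, 0 if it is
    longer. Equal gaps carry no information and are skipped.
    """
    gaps = [later - earlier for earlier, later in zip(click_times, click_times[1:])]
    bits = []
    for first, second in zip(gaps[0::2], gaps[1::2]):
        if first == second:
            continue
        bits.append(1 if first < second else 0)
    return bits


def trade_signals(click_times):
    """Map each random bit to a hypothetical order: 1 -> buy, 0 -> sell."""
    return ["buy" if bit else "sell" for bit in decay_bits(click_times)]
```

Because radioactive decay is stochastic, the signals carry no market insight at all, which is the point the piece makes about randomness and stock decisions.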

Her other connected project in the series, “#fortunecookie”, plays off the Schrödinger’s Cat thought experiment, which explores whether a cat may be both alive and dead depending on an earlier random event.

Using Twitter posts and fortune cookies to make the experiment physical, the design creates “an alternate vision where Twitter is providing a new view of our future selves by taking existing tweets (which represent an event that has happened in the past to someone else) and changing the language to create a fortune (which will represent an event that has the probability to happen in the future to the receiver of the fortune cookie).”

More of Lee’s work can be found at Jumpandpoint.com and on her blog, Designerasdiscoverer.blogspot.com

History

A few favorites of the speculations and stories told about our digitally connected world from the past 40+ years.

1967: “1999 A.D.”, a Philco-Ford production starring Wink Martindale.

1968: The famous 2001: A Space Odyssey clip used most recently in a patent lawsuit between Samsung and Apple regarding tablet designs.

1969: A film created at the Post Office Research Station in Dollis Hill by BT Research. The vision includes a wood-clad display that is capable of transmitting and receiving documents via a UV light and photosensitive paper. I wouldn’t mind having one sitting on top of my desk today.

1970s: A commercial from Xerox in the ’70s (couldn’t track down the exact date) showing off the Xerox Alto in an office setting of the future. Note: Ethernet was also developed at about the same time to connect PARC’s Altos together.

1990: Mark Weiser and his team at Xerox PARC using their lab’s tabs, pads and boards. (via: bashford)

(Credit: PARC)

1992: A clip of Bruce Sterling waxing about electronic exploration and how “Hippies with computers are simply impossible to ignore.”

Related: Hear Kevin Slavin, Julian Bleecker, and Bruce Sterling tell their latest Thrilling Wonder Stories (skip to the halfway point)

1993: A look at a series of AT&T “You Will” commercials narrated by Tom Selleck where he asks you if you have ever:

– Borrowed a book from thousands of miles away?

– Crossed the country without stopping for directions?

– Sent someone a fax from the beach?

2011: Finally, for some perspective, this year’s glossy “Productivity Future Vision” from Microsoft:

Additional Links:

- Futureion: http://futureion.org

- http://future-drama.tumblr.com/

- Thrilling Wonder Stories: http://www.thrillingwonderstories.co.uk/

- Bret Victor: Brief rant on the future of interaction design

- Claire Rowland: Design beyond the glowing rectangle

- Julian Bleecker: “Design fiction” talk from Lift 09