Real-World Data Acquisition and Visualization

The world around us is changing every day. Increased demands on our infrastructure and natural resources have already generated a range of global sustainability projects with many of them using advances in technology to help counter environmental degradation. Will this trend continue? What else can technology do in the face of such accelerated change?

Lord Kelvin is often quoted as saying, “If you cannot measure it, you cannot improve it.” An integral component of any future sustainability, automation or process-optimization project will be collecting relevant, accurate and, in some cases, real-time streaming data from the physical world around us. This article, Part 1 in a series, gives an overview of the significant technological blocks, middleware and other architectural components behind technical solutions that use data from the real, physical world.

Sensors

Electrical transducers, or sensors, have been around for a very long time and act as the first interface between the physical world and the digital world. A transducer converts energy from one form into another; the ones of interest here turn mechanical, electromagnetic, chemical, acoustic or thermal energy into electrical signals.

A wide range of transducers and sensors is now on the market, designed for many applications and scenarios, with prices starting as low as $1. Common types include: Accelerometers (single and multi-axis, to measure the magnitude and direction of acceleration); Barometers (to measure pressure); Capacitive sensors (touch sensors); Non-invasive current sensors (to tell how much current is passing through an electrical line, also called “split core current transformers”); Gas sensors (alcohol breath analyzers, diesel and gasoline exhaust sensors, VOC sensors); Radiation sensors (Geiger counters); and hundreds of other specialized devices used to measure almost any changing environmental condition you can think of.

Microcontrollers

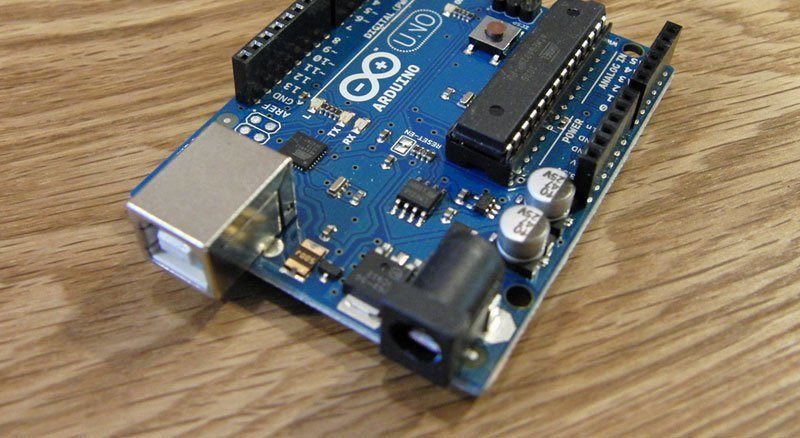

Data from these transducers is transmitted to the digital world using microcontroller modules equipped with a built-in analog-to-digital converter (ADC) and network connectivity. Some of the popular modules used today include “open-source hardware” devices like Arduino, Raspberry Pi and openPicus. These boards are often described as “open-source hardware” primarily because their reference designs are distributed under a GPL or “Creative Commons” license and their layout and production files are available online. Hence, these boards can technically be cloned, and applications written for one hardware board can in principle work with compatible hardware from a different manufacturer.
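To make the ADC step concrete, here is a minimal Python sketch of the arithmetic involved in turning a raw converter count back into a physical quantity. It is an illustration under stated assumptions, not code taken from any of the boards above: the 10-bit resolution, the 5.0 V reference, and the linear temperature sensor (0.5 V offset, 10 mV per degree Celsius) are all assumed values, and the function names are hypothetical.

```python
def adc_to_volts(count: int, bits: int = 10, v_ref: float = 5.0) -> float:
    """Convert a raw ADC count to volts for an ideal converter.

    Assumptions: `bits` of resolution and a reference voltage `v_ref`;
    the largest possible count is mapped to exactly `v_ref`.
    """
    max_count = (1 << bits) - 1  # 1023 for a 10-bit converter
    if not 0 <= count <= max_count:
        raise ValueError(f"count must be between 0 and {max_count}")
    return count * v_ref / max_count


def volts_to_celsius(volts: float,
                     offset_v: float = 0.5,
                     volts_per_degree: float = 0.01) -> float:
    """Convert a voltage to degrees Celsius for a hypothetical linear sensor."""
    return (volts - offset_v) / volts_per_degree


if __name__ == "__main__":
    raw = 154  # an example count, as a board might report it
    volts = adc_to_volts(raw)
    print(f"{raw} counts -> {volts:.3f} V -> {volts_to_celsius(volts):.1f} C")
```

A real sensor's datasheet supplies the offset and scale factor; the defaults here only stand in for those numbers.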

Arduino boards have become particularly popular amongst researchers and developers, in part due to the wide variety of Arduino Shields available to extend the base functionality of the microcontroller. A few examples include the 3G/GSM/GPRS shield (which provides Voice/SMS as well as HTTP/HTTPS and FTP/FTPS data transmission capability); Wireless shields (which provide XBee, ZigBee, 802.11, Bluetooth and similar connectivity); and Motor shields (which are used for driving inductive loads such as relays, solenoids, DC and stepping motors).

Data Management

Data from these devices is typically sent to Internet-based web servers. Usually, such data exchange can be kept point-to-point (REST APIs to the rescue!). But when the number of such devices grows exponentially (which is usually the case in real-world implementations), the interconnection of Internet applications and physical devices could become “the nightmare of ubiquitous computing” (Kephart & Chess, 2003), "in which human operators will be unable to manage the complexity of interactions in the system, neither architects will be able to anticipate that complexity, and thus to design the system", as Vagan Terziyan and Artem Katasonov envision it in their article on applying semantic and agent technologies to industrial automation.
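The point-to-point exchange mentioned above can be sketched with nothing more than the Python standard library. The example below only builds the HTTP request for one sensor reading; it does not send it. The URL layout (`/devices/<id>/readings`), the JSON field names and the host `example.invalid` are hypothetical choices for illustration and do not describe the API of any particular server or platform.

```python
import json
import urllib.request


def build_reading_request(base_url: str, device_id: str, sensor: str,
                          value: float, unit: str) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying one sensor reading.

    The endpoint path and payload fields are assumptions for this sketch.
    """
    payload = {
        "device_id": device_id,
        "sensor": sensor,
        "value": value,
        "unit": unit,
    }
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/devices/{device_id}/readings",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_reading_request("http://example.invalid/api", "node-1",
                                "temperature", 21.5, "C")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Passing the request to `urllib.request.urlopen` would actually transmit it. With one device and one server this is all that is needed, which is why the approach stops scaling once every device needs its own endpoint, credentials and error handling.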

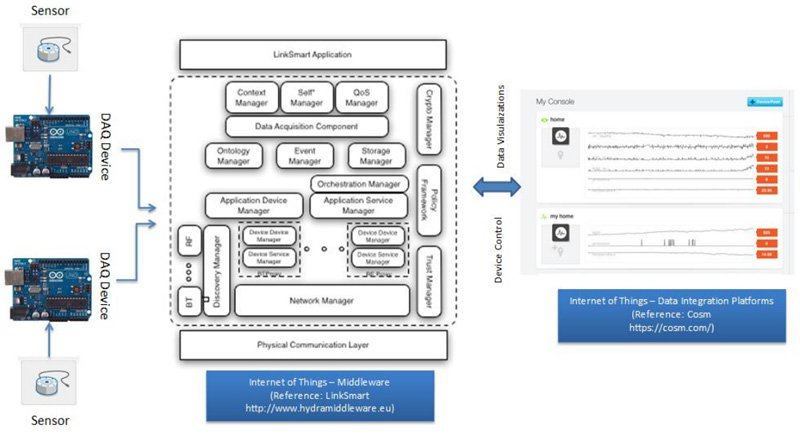

In situations where there are hundreds or even thousands of physical devices present in a solution, middleware systems act as an intermediate layer that provides connectivity abstraction, letting domain-specific Internet applications communicate with a wide variety of physical devices and interfaces. The fundamental role of the middleware is to provide a service-based abstraction that shields applications from the physical ‘things’, thereby addressing the core challenges, namely:

- Scale created by the thousands of connected ‘things’ that need to coordinate and work cooperatively

- Deep heterogeneity of devices with completely different operating characteristics

- Dynamic topology caused by the unpredictable turning ‘on’ and ‘off’ of devices

The key functional blocks of a Middleware typically are:

- Device discovery and management

- Interoperation

- Context Detection (state of the physical devices)

- Security and Privacy (Authentication and Access Control)

- Managing large data volumes (Big Data Solutions)
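As a rough illustration of the first three blocks in this list (discovery and management, interoperation, and context detection), the following Python sketch keeps an in-memory registry of devices. It is a toy model, not the design of any middleware named in this article: the `Device` and `DeviceRegistry` classes and all their methods are hypothetical, and security and large-scale storage are deliberately left out.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Device:
    device_id: str
    kind: str                   # e.g. "thermometer" or "geiger"
    read: Callable[[], float]   # device-specific driver hidden behind one call
    online: bool = True         # context: is the device currently reachable?


class DeviceRegistry:
    """Toy middleware layer: applications ask for a kind of device,
    not for a specific piece of hardware."""

    def __init__(self) -> None:
        self._devices: Dict[str, Device] = {}

    def register(self, device: Device) -> None:
        """Device management: add or replace a device."""
        self._devices[device.device_id] = device

    def set_online(self, device_id: str, online: bool) -> None:
        """Context detection: record a device turning on or off."""
        self._devices[device_id].online = online

    def discover(self, kind: str) -> List[str]:
        """Device discovery: ids of online devices of the given kind."""
        return sorted(d.device_id for d in self._devices.values()
                      if d.kind == kind and d.online)

    def read_all(self, kind: str) -> Dict[str, float]:
        """Interoperation: read every matching device through one interface."""
        return {i: self._devices[i].read() for i in self.discover(kind)}


if __name__ == "__main__":
    registry = DeviceRegistry()
    registry.register(Device("t1", "thermometer", lambda: 21.5))
    registry.register(Device("t2", "thermometer", lambda: 19.0))
    registry.set_online("t2", False)  # device drops off the network
    print(registry.read_all("thermometer"))
```

The application code never touches a driver directly, so a device can be swapped or switched off without the caller changing, which is the abstraction the list above describes.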

The middleware usually provides dashboard-driven access to the various devices that are connected on a particular network. Other requirements, such as multi-factor authentication, access controls and device event monitoring, are also typically part of this dashboard.

Some of the leading middleware systems in this domain are:

- LinkSmart (previously called Hydra)

- Aspire (for RFID solutions)

- UbiRoad Semantic Middleware (for Smart Vehicular Systems and in-car/roadside interoperability)

- UbiSOAP (SOAP based, two-layer architecture)

- UbiWARE (using autonomous agents to monitor state of devices)

- GSN (Global sensor network - XML and SQL based virtual sensor manager)

- SIRENA (framework based on Devices Profile for Web Services (DPWS), to interconnect devices inside four domains: industrial, telecommunication, automotive, home automation)

- SOCRADES (uses DPWS and SAP NetWeaver platform)

- SAI Middleware (SOA based distributed architecture using Java Message Service (JMS) and ActiveMQ)

- SMEPP (quality oriented P2P service architecture).

While prototype Device-to-Middleware integration and Machine-to-Machine solutions can be implemented using some of the above technologies, one of the key challenges in large-scale, real-world device or sensor networks arises when devices become mobile or dynamic and the entire solution needs to be reconfigured on the fly.

Once the data acquisition and querying mechanisms are in place, the final step in the solution is to store and visualize the data that is being sensed and collected. A large number of data aggregation platforms (also known as Internet of Things platforms) are currently available for visualizing data collected from the real world. Some of the leading platforms are: Cosm (formerly Pachube), Thingworx, Evrythng, SensorCloud, Sen.se, and the open-source solutions Nimbits and ThingSpeak. These platforms offer web-service APIs for sending data in specified formats (XML, JSON, CSV, etc.) and provide sophisticated consoles and dashboards to control, monitor and analyze this data.
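Since these platforms accept data in formats such as JSON and CSV, a device-side program usually needs a small serialization step before upload. The Python sketch below shows one way to do that with the standard library. The field names (`timestamp`, `sensor`, `value`) and the sample reading are made up for illustration; each platform defines its own required schema, which this example does not attempt to reproduce.

```python
import csv
import io
import json
from typing import Dict, List, Sequence


def to_json(readings: List[Dict[str, object]]) -> str:
    """Serialize readings as a JSON array with stable key order."""
    return json.dumps(readings, sort_keys=True)


def to_csv(readings: List[Dict[str, object]], fields: Sequence[str]) -> str:
    """Serialize readings as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(fields), lineterminator="\n")
    writer.writeheader()
    writer.writerows(readings)
    return buffer.getvalue()


if __name__ == "__main__":
    # Illustrative values only, not real measurements.
    sample = [{"timestamp": "2013-01-01T10:00:00Z",
               "sensor": "radiation",
               "value": 0.12}]
    print(to_json(sample))
    print(to_csv(sample, ["timestamp", "sensor", "value"]))
```

Keeping serialization separate from transport makes it easier to target a different platform later by changing only the format function.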

A high level diagram of a data acquisition architecture involving the above technologies is as follows:

Even though a wide variety of elements is at play here, no single company or corporation is behind all these technologies. Solutions are developed on open, interoperable standards and protocols, which greatly facilitates building globally distributed, cost-effective and rapidly prototyped systems.

Deployment

A good example of this in practice occurred during the radiation leaks in March 2011 at Fukushima, Japan, where a large number of Geiger-counter-based data sensing solutions were developed by independent developers and manufacturers around the world. These counters were standardized so that they could easily connect to Internet-enabled microcontrollers like Arduino (which again was open source, so the boards could be fabricated by different manufacturers). Data feeds from hundreds of such counters were eventually aggregated on Internet of Things platforms like Pachube, and even on Google Maps, to find and monitor the areas that were heavily affected by the radiation.

Japanese radiation feeds from Failed Robot & Safecast (Additional examples Data Feed, Shigeru's Geiger Counter)
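Aggregating many independent feeds onto a map comes down to grouping readings by location. The Python sketch below averages readings per grid cell. It is only an illustration of the idea and does not describe how Pachube, Safecast or any other project processed its data; the grid approach, the function name, the cell size and the sample values are all assumptions.

```python
import math
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple

Reading = Tuple[float, float, float]  # (latitude, longitude, value)


def average_by_cell(readings: Iterable[Reading],
                    cell_deg: float = 1.0) -> Dict[Tuple[int, int], float]:
    """Average readings that fall into the same latitude/longitude grid cell.

    Each cell is identified by the integer pair
    (floor(lat / cell_deg), floor(lon / cell_deg)).
    """
    cells: Dict[Tuple[int, int], List[float]] = defaultdict(list)
    for lat, lon, value in readings:
        key = (math.floor(lat / cell_deg), math.floor(lon / cell_deg))
        cells[key].append(value)
    return {key: mean(values) for key, values in cells.items()}


if __name__ == "__main__":
    # Made-up readings, not real measurements.
    feeds = [(37.4, 141.0, 2.0), (37.6, 141.2, 4.0), (35.7, 139.7, 0.1)]
    for cell, avg in sorted(average_by_cell(feeds).items()):
        print(cell, avg)
```

A production system would also need to reconcile units and sensor calibration across feeds before averaging, which is where the standardization described above pays off.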

Access to the above technologies, and know-how in using them, enables a global team of software developers, designers and manufacturers to deliver solutions such as the one deployed at Fukushima in a matter of weeks or even days. Such emergency deployments are only possible when the technology is built on open standards, is easily interoperable and is not owned by a single entity or company.

Interoperable standards and the technical know-how will continue to be an issue when building solutions in the domain of pervasive computing using the technologies reviewed, but in time their combination looks to provide a real opportunity in helping us better understand and manage the world's increasing complexity.
